
Blogs I Follow By Women In Tech

This is how I assume all women look when they write their dev blogs. I certainly do.

Recently, a reader wrote in[1] and said that she enjoyed my blog because it's hard to find technical blogs not written by men. I counted up all the blogs I subscribe to in my RSS reader and … yeah, there aren't a ton.

But I do follow a few women in tech whose writing inspires me. Not all of these blogs are purely tech-focused, and not all of them update frequently, but it costs me nothing to keep their subscriptions in my feed reader, and they all are great reads when they do post.

Check them out, and if you know of great blogs by tech women that are not on this list, get in touch.

- Rach Smith’s Digital Garden

- Lea Verou

- Chelsea Troy

- Cassey Lottman

- Syscily - The Culture Coded Dev

- Julia Evans

- Cassidy Williams

- Maggie Appleton

- Daniela Baron

Again, and I cannot stress this enough – if you know of a blog by a woman or nonbinary engineer, product manager, devrel, etc., I would very much like to know about it!

-

[1] "A reader wrote in": I cannot describe how unlikely I thought it was that I would ever write that sentence. And yet, here we are.

-

A Handy Shell Script to Publish Jekyll Drafts

The quest to remove friction from posting to this blog continues. In an earlier post, I shared how I used rake to automatically generate a blog template for me and place it in Jekyll's drafts folder. Now I've realized I'd also like to handle publishing that post with approximately 10% fewer keystrokes.

I’ll share the script first, then explain my motivations and how it works.

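```bash
#!/bin/bash
# Prompt shown by select (replaces bash's default "#? ")
PS3="Choose a draft to publish: "
# Offer every file in _drafts/ as a numbered menu item
select FILENAME in _drafts/*; do
  today=$(date -I)                   # today's date as YYYY-MM-DD
  shortfile=$(basename "$FILENAME")  # drop the _drafts/ prefix
  mv "$FILENAME" "_posts/$today-$shortfile" && echo "Successfully moved" || echo "Had a problem"
  break                              # stop after one pick; select loops otherwise
done
exit
```

That's it, that's literally it, but I'm so excited about it.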
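If you'd like to poke at the core move without the interactive menu (or without touching real drafts), here's a minimal sketch. The `publish_draft` function, its optional date argument, and the `mkdir -p _posts` are hypothetical additions for illustration, not part of the script above; the demo runs in a throwaway directory created with `mktemp -d`.

```bash
#!/bin/bash
# Hypothetical helper, not part of the original script: performs the same
# date-prefixed move for a single draft passed as an argument.
# Usage: publish_draft _drafts/some-post.md [YYYY-MM-DD]
publish_draft() {
  local draft="$1"
  # Use the given date, or fall back to today's date like the script does.
  local today="${2:-$(date -I)}"
  local shortfile
  shortfile=$(basename "$draft")
  mkdir -p _posts   # assumption: create _posts if it doesn't exist yet
  mv -- "$draft" "_posts/$today-$shortfile" && echo "Successfully moved" || echo "Had a problem"
}

# Try it out in a scratch directory so no real drafts are touched.
cd "$(mktemp -d)" || exit 1
mkdir _drafts
echo "Hello, world" > _drafts/rake-publish.md
publish_draft _drafts/rake-publish.md 2025-11-19
ls _posts
```

Passing the date explicitly keeps the result predictable for a dry run; calling `publish_draft _drafts/foo.md` with no date falls back to `date -I`, as the original script does.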

Jekyll considers a post to be a draft if it is a markdown file in its `_drafts` folder. It considers it to be published if it is in its `_posts` folder. I believe the filename in `_posts` also needs to contain the date (e.g. `2025-11-19-this-is-a-post.md`); Jekyll's docs do describe `YEAR-MONTH-DAY-title.MARKUP` as the naming format for posts.

So what I have to do when I publish a new post is

```bash
mv _drafts/mydraft.md _posts/yyyy-mm-dd-mycoolpost.md
```

and that is clearly too many keystrokes, right? Now I just have to type `rake publish` (I created a rake task that just runs this script), choose my file from a list, and I'm done.

To write this I had to learn about two new-to-me bash concepts: the `select` construct and `basename`.

The `select` construct

`select` can be used to create (super basic) menus. The man page for `select`… is for the wrong thing! (It documents the `select(2)` system call, not the shell keyword; running `help select` inside bash shows the keyword's own short help.) But a good writeup on the select construct can be found here. In short, `select thing in list` will pop up a menu that you can interact with by choosing an item number, and it assigns the chosen value to the variable `$thing`.

You can do:

```bash
select option in "BLT" "cheesesteak" "pb&j"; do echo "You picked $option"; break; done
```

and you'll get the following output:

```
1) BLT
2) cheesesteak
3) pb&j
#?
```

(`#?` is bash's default menu prompt; my script replaces it by setting `PS3`.)

In the case of my script, the "in" is the contents of the directory, `_drafts/*`. (Notice the syntax is not `in ls _drafts/*`, implying that we're not just executing a command and passing the results to the `select` construct. Which is a mystery for another time.) The upshot, however, is that I get a list like:

```
1) _drafts/ai-is-hard.md            3) _drafts/microblogging.md   5) _drafts/recipe-buddy-part1.md
2) _drafts/efficient-linux-ch-4.md  4) _drafts/rake-publish.md
```

(oooh, a peek behind the curtain!)
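On that mystery: `_drafts/*` is ordinary pathname expansion (globbing). The shell expands the pattern into a sorted list of matching paths before `select` runs, the same way it would for a `for` loop, so no `ls` is involved. Here's a small sketch to see it for yourself; the directory and filenames are made up for the demo, and feeding the menu choice in with `<<<` is just a way to run it without typing.

```bash
#!/bin/bash
# Sketch: _drafts/* is globbing, expanded by the shell before select runs.
# The filenames below are made up for the demo.
cd "$(mktemp -d)" || exit 1
mkdir _drafts
touch _drafts/a-post.md _drafts/b-post.md "_drafts/has space.md"

# The glob expands to one word per matching file, spaces intact.
set -- _drafts/*
echo "glob words: $#"   # 3

# Word-splitting the output of ls instead breaks "has space.md" in two.
set -- $(ls _drafts/*)
echo "ls words: $#"     # 4

# select receives the same expanded list. The choice is fed in on stdin
# rather than typed; "3" picks the third item. The menu goes to stderr.
PS3="Choose a draft to publish: "
select FILENAME in _drafts/*; do
  echo "picked: $FILENAME"
  break
done <<< "3"
```

Splitting `ls` output happens to work for simple names, but the glob keeps names with spaces intact, which is one good reason the `in _drafts/*` form is the one to use.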

`select` will then allow you to choose one of the numbers and store the value in the variable we defined (here, `FILENAME`). If you type something that isn't one of the listed numbers, `select` sets `FILENAME` to an empty string, so the `mv` fails and the script prints "Had a problem". It will continue to loop until it reaches a `break` command, so the `break` is important in this script. After that, all we need to do is get today's date and rename/move the file to its new home.

basename

This is a lil one, but a handy one. Again, let's go to the man page for this util:

"basename - strip directory and suffix from filenames"

Does what it says on the tin. If you have `/home/user/long/path/to/file.md` and you want `file.md`, `basename /home/user/long/path/to/file.md` will get that for you. Note that it only strips the extension if you pass that extension as a second argument and it matches the end of the filename (`basename /home/user/long/path/to/file.md .md` gives `file`), which is a little quirky. I want to keep the extension, so the one-argument form is exactly what I need.

And there you have it, 10 lines of code that took longer to write about than to write, and which will surely save me ~~hours~~ ~~minutes~~ seconds of precious time. Huzzah!

-

Are There Any Good AI Documentation Apps Out There?

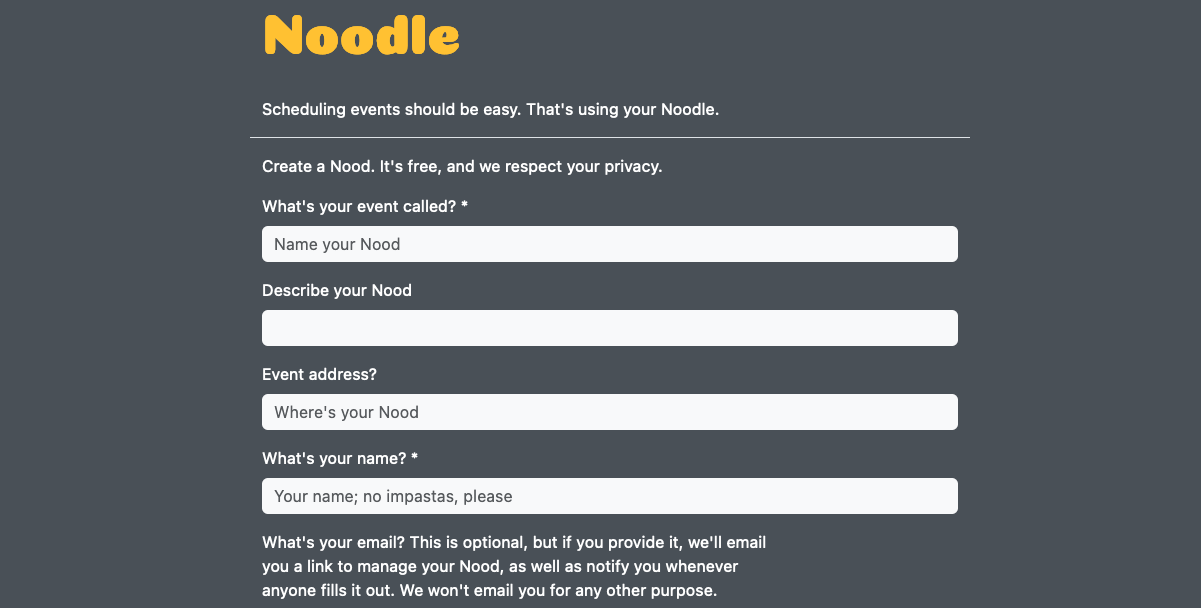

One of the places where I had hoped "AI" could truly be a time-saver is creating documentation. There are a number of services out there, including Guidde, Scribe, and Tango, that claim that with their browser extension, you can simply perform a task while the extension records you, and their software will then describe what you did, magically creating a how-to document or video to share with your team.

The promise is amazing, but the reality is… not so much.

The first limitation of many of these services is that they only capture actions taken in the browser. If your task requires doing anything outside the browser, you may be out of luck. (I believe Scribe now offers a desktop app; this may be true of others as well.)

Unfortunately, the second limitation of these services is that they are all stupid.

-

From Idea to Acceptance: Crafting a Standout Conference Proposal

Honored to have been a part of this panel on how to get started speaking at tech conferences and meetups – with an emphasis on writing a good proposal – with some very talented and prolific folks. And a big thank you to Joy Hopkins for organizing the panel and having me on.